To really get under the hood of your site's SEO performance, you have to go beyond the usual analytics dashboards. The goal is to see the raw, unfiltered truth of how search engines actually interact with your website. This means diving into your server logs, spotting exactly what the bots are up to, and finding those pesky technical issues that most other tools completely miss.

Why Your Server Logs Are an SEO Goldmine

Let's be real—server logs aren't pretty. They're just massive, often cryptic text files. But within that raw data lies the absolute truth of every single request made to your server. While tools like Google Search Console give you a nice, clean summary, your server logs are the ground-level view you can't get anywhere else.

Think of it this way: Google Search Console is the highlight reel. Your server logs are the full, unedited game footage, capturing every single play, fumble, and touchdown. This complete record is where the truly valuable insights are hiding.

The Unfiltered Truth of Search Bot Behavior

Your server logs show you precisely what search engine bots—like the infamous Googlebot—are doing on your site. I'm talking about every page they visit, every image they request, and every single error they hit along the way. With this kind of detailed data, you can finally get concrete answers to critical SEO questions.

Here's what you can uncover:

- Crawl Budget Optimization: Is Googlebot getting lost and wasting its precious time on pages you don't care about, like old archives or faceted navigation URLs? Your logs will show you exactly where this wasted effort is happening, so you can block those sections and redirect bots toward your most important pages.

- Orphan Page Discovery: Ever wonder if you have important pages getting search bot traffic but no internal links pointing to them? These "orphan pages" are often invisible to standard site crawlers, but they stick out like a sore thumb in log files. Finding them is like discovering a pile of low-hanging SEO fruit.

- Technical Error Diagnosis: You can see every single 4xx and 5xx status code that Googlebot encounters, often long before these errors even show up in other reporting tools. This gives you a massive head start on fixing broken links and server problems before they have a chance to hurt your rankings.

When you start analyzing your server logs, you stop making educated guesses about your technical SEO. Instead, you start making decisions based on hard data straight from the source. It’s hands-down the most accurate way to take your site’s technical pulse.

Being able to dig into this level of detail is becoming more critical every day. The total amount of data created globally is on track to hit an incredible 149 zettabytes, and that number is projected to soar past 394 zettabytes by 2028. This explosion of information makes log file analysis a fundamental skill for anyone serious about managing their piece of the web. You can read more about this trend in Statista's research on global data creation.

Getting and Preparing Your Log Data for Analysis

Before you can pull any insights from a log file, you first have to get your hands on it. This initial step can be surprisingly tricky, as the location of your server logs depends entirely on your hosting setup. If you’re running a dedicated server or a VPS, you probably have direct root access to grab them. For everyone else on shared hosting, it usually means digging through a cPanel or a similar dashboard to find a section like "Raw Access Logs."

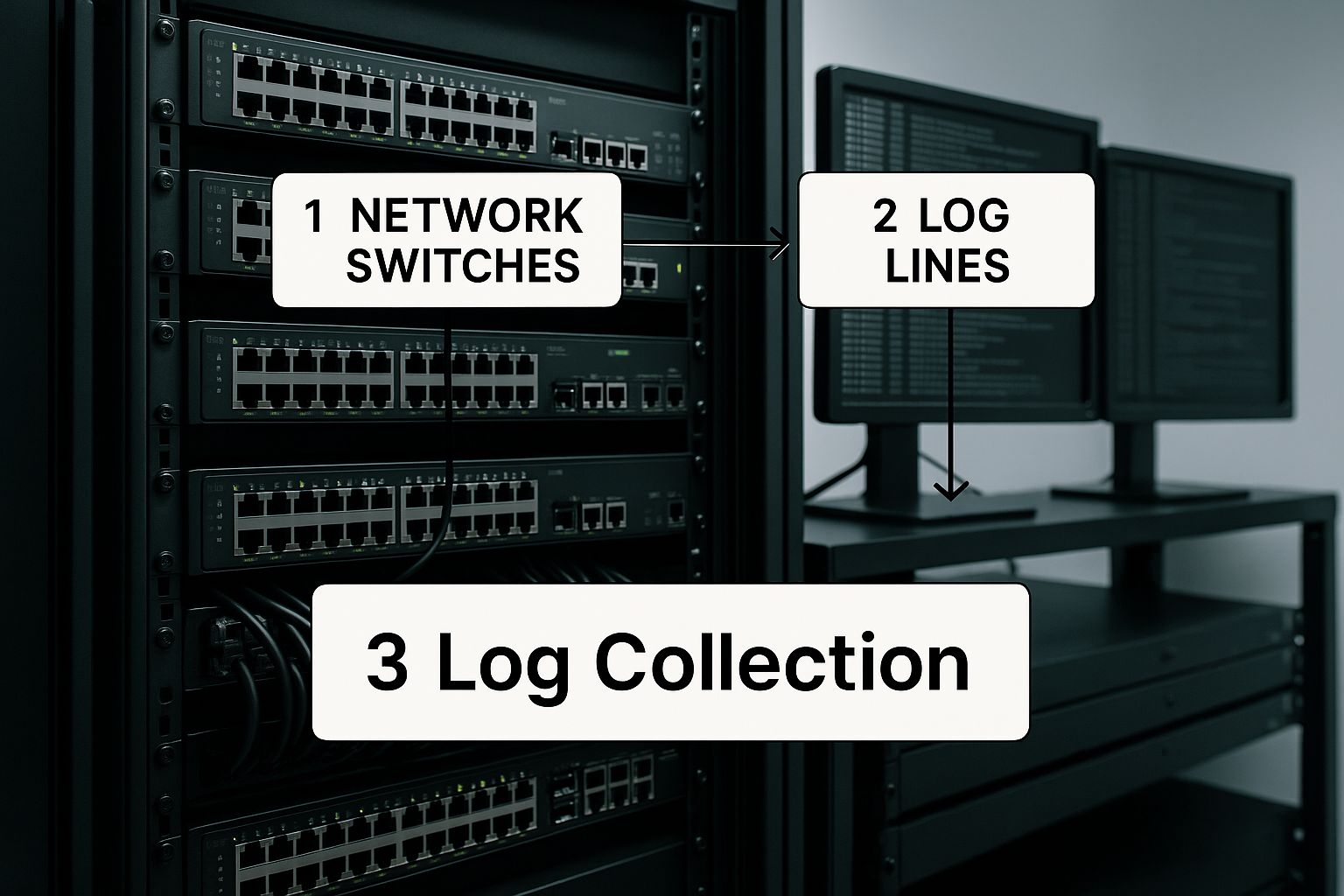

Once you find them, you can typically download the logs, which often come as compressed .gz files. Unzip them, and you'll find the raw text file inside – that’s the gold you're after. This whole process, from collection to analysis, is a journey in itself.

As the visual shows, "Log Collection" is the foundational step. Without a reliable way to get this data, the rest of your analysis is dead in the water.
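You don't strictly have to unzip the files by hand. As a minimal sketch, Python's standard `gzip` module can read compressed logs directly; the helper name `read_log_lines` and the file name in the usage note are hypothetical.

```python
import gzip


def read_log_lines(path):
    """Yield lines from a plain-text or gzip-compressed log file."""
    # Pick the opener from the file extension; both accept text mode.
    opener = gzip.open if path.endswith(".gz") else open
    # errors="replace" keeps one odd byte from aborting the whole read.
    with opener(path, "rt", encoding="utf-8", errors="replace") as handle:
        for line in handle:
            yield line.rstrip("\n")
```

Calling `read_log_lines("access.log.gz")` in a `for` loop streams the file one line at a time, which matters once the logs are too big to load into memory.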

Demystifying Common Log Formats

When you first open a raw log file, it can look like complete gibberish. Don't worry, there's a method to the madness. Most web servers, whether it's Apache or Nginx, stick to a "Common Log Format" or a slightly more detailed "Combined Log Format." Each piece of information in a single line tells you something important.

Here’s a quick breakdown of what you're actually looking at:

- IP Address: The address of the client (or bot) that made the request.

- Timestamp: The exact date and time the request happened.

- Request Method & URL: What file was requested, like `GET /my-awesome-blog-post/`.

- Status Code: The server's response (200 for a successful request, 404 for a page not found, etc.).

- User-Agent: The signature of the browser or bot, like `Googlebot`.

Getting comfortable with these fields is the key to unlocking everything else. The user-agent, for example, is exactly how you’ll separate search engine crawlers from regular human visitors.
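To make those fields concrete, here is a rough sketch of parsing one Combined Log Format line with a regular expression. The sample line, IP address, and function name are made up for illustration, and your server's format may differ if it has been customized.

```python
import re
from datetime import datetime

# Combined Log Format:
# IP identity user [timestamp] "METHOD URL PROTOCOL" status bytes "referer" "user-agent"
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<url>\S+)(?: \S+)?" '
    r'(?P<status>\d{3}) (?P<size>\S+) '
    r'"(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)


def parse_line(line):
    """Return a dict of fields for one log line, or None if it does not match."""
    match = LOG_PATTERN.match(line)
    if match is None:
        return None
    hit = match.groupdict()
    hit["status"] = int(hit["status"])
    hit["time"] = datetime.strptime(hit["time"], "%d/%b/%Y:%H:%M:%S %z")
    return hit


# Hypothetical sample line, not taken from a real log.
sample = (
    '66.249.66.1 - - [10/Oct/2023:13:55:36 +0000] '
    '"GET /my-awesome-blog-post/ HTTP/1.1" 200 5123 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"'
)
hit = parse_line(sample)
print(hit["url"], hit["status"], "Googlebot" in hit["agent"])
```

Returning `None` for lines that don't match lets you count and inspect malformed entries instead of crashing halfway through a large file.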

Understanding Key Status Codes

The HTTP status code is one of the most vital pieces of data in your logs. It’s a direct message from your server about the outcome of a request. To make sense of your log files, you need to know what these codes mean for your SEO.

Common Log File Status Codes and Their SEO Meanings

| Status Code | Meaning | SEO Implication |
|---|---|---|
| 200 OK | The request was successful. | Ideal. This means search engines can access and index your content without issues. |
| 301 Moved Permanently | The page has permanently moved to a new URL. | Good for SEO when used correctly. Passes link equity to the new URL, but chains of 301s can waste crawl budget. |
| 302 Found (or Temporary Redirect) | The page has temporarily moved. | Use with caution. Signals to search engines that the original URL will return, so link equity isn't passed as strongly. |
| 403 Forbidden | The server understood the request but refuses to authorize it. | A problem. If Googlebot gets a 403, it can't crawl the page, which means it won't get indexed. |
| 404 Not Found | The server can't find the requested page. | A high number of 404s can waste crawl budget and lead to a poor user experience. It's a signal to fix broken links. |
| 500 Internal Server Error | A generic error message; something went wrong on the server. | Critical to fix. A 500 error stops Googlebot in its tracks and can cause pages to be de-indexed if not resolved quickly. |
| 503 Service Unavailable | The server is temporarily down for maintenance or is overloaded. | Acceptable for planned maintenance if you use a Retry-After header. If it's chronic, it signals a major hosting or site health issue. |

This table isn't exhaustive, but it covers the main players you'll encounter. Spotting patterns in these codes—like a sudden spike in 404s or persistent 503s—is your first clue that something needs immediate attention.
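If you want to spot those patterns yourself, a quick tally is usually enough to start. This sketch assumes each hit is already a dict with a numeric `status` key (a hypothetical shape); the sample hits are invented.

```python
from collections import Counter


def status_summary(hits):
    """Return (hits per status class, hits per exact code) for a list of hits."""
    by_class = Counter(f"{hit['status'] // 100}xx" for hit in hits)
    by_code = Counter(hit["status"] for hit in hits)
    return by_class, by_code


# Hypothetical bot hits; in practice these come from your parsed log lines.
hits = [
    {"status": 200}, {"status": 200}, {"status": 301},
    {"status": 404}, {"status": 404}, {"status": 404},
    {"status": 503},
]
by_class, by_code = status_summary(hits)
print(dict(by_class))
print(by_code.most_common(3))
```

Running the same tally per day or per week is what makes a sudden jump in 404s or 503s visible.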

Preparing Your Data for Actionable Insights

Raw log data is incredibly noisy. It’s full of requests from your own team's IP addresses, uptime monitoring services, and a whole zoo of bots that have nothing to do with your SEO performance. Filtering out this noise is non-negotiable for a clean, accurate analysis.
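Here's one way that filtering could look in practice. Treat it as a sketch: the network range, the monitoring agent names, and the bot list are placeholder assumptions you would swap for your own, and each hit is assumed to be a dict with `ip` and `agent` keys.

```python
import ipaddress

# Hypothetical values: replace with your own office/VPN ranges and tools.
INTERNAL_NETWORKS = [ipaddress.ip_network("203.0.113.0/24")]
NOISE_AGENTS = ("uptimerobot", "pingdom")
SEARCH_BOTS = ("googlebot", "bingbot")


def is_internal(ip):
    """True if the IP falls inside one of the internal networks."""
    try:
        address = ipaddress.ip_address(ip)
    except ValueError:
        return False  # not a valid IP string, so it cannot match a range
    return any(address in network for network in INTERNAL_NETWORKS)


def keep_search_bot_hits(hits):
    """Drop internal and monitoring traffic; keep hits claiming to be search bots."""
    kept = []
    for hit in hits:
        agent = hit["agent"].lower()
        if is_internal(hit["ip"]):
            continue
        if any(name in agent for name in NOISE_AGENTS):
            continue
        if any(name in agent for name in SEARCH_BOTS):
            kept.append(hit)
    return kept
```

Note that this trusts the user-agent string, which anyone can fake, so it is a first pass rather than proof that a hit really came from a search engine.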

The real power move is to enrich your log data. Simply looking at bot hits isn't enough. You need to know what those crawled URLs are. Are they important, indexable pages? Or are they junk URLs with tracking parameters?

This is where I find combining data sources becomes a complete game-changer. My go-to process involves merging the log file data with a fresh site crawl from a tool like Screaming Frog. By matching the URLs from the logs to the URLs in your crawl report, you can add a layer of crucial context.

For instance, you can suddenly see if Googlebot is spending its time on pages that are:

- Non-indexable (`noindex` tag)

- Blocked by your robots.txt file

- Canonicalized to a different URL

- Completely missing from your site architecture (orphan pages)

This merged dataset transforms a simple list of server hits into a powerful diagnostic tool. You can pinpoint exactly how much crawl budget is being wasted on non-essential pages, an insight that directly impacts how well your most important content performs. Understanding this connection is just as fundamental to good SEO as knowing how to check website ranking to measure your progress. By following this preparation process, you move from just having raw data to holding a truly strategic asset, ready for deep-dive analysis.
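The merge itself can be sketched in a few lines. This assumes bot hits are dicts with a `url` key and crawl rows are dicts with `url`, `noindex`, `blocked_by_robots`, and `canonical` keys. Those column names are hypothetical and will not match any particular crawler's export as-is, and the URLs need to be in the same form on both sides.

```python
from collections import Counter


def enrich_hits(bot_hits, crawl_rows):
    """Attach a crawl-based label to every URL that bots requested."""
    hit_counts = Counter(hit["url"] for hit in bot_hits)
    crawl_by_url = {row["url"]: row for row in crawl_rows}
    report = []
    for url, count in hit_counts.most_common():
        row = crawl_by_url.get(url)
        if row is None:
            label = "not in crawl (possible orphan)"
        elif row["blocked_by_robots"]:
            label = "blocked by robots.txt"
        elif row["noindex"]:
            label = "noindex"
        elif row["canonical"] != url:
            label = "canonicalized elsewhere"
        else:
            label = "indexable"
        report.append({"url": url, "bot_hits": count, "label": label})
    return report
```

Summing `bot_hits` per label gives you the crawl budget split between indexable pages and everything else.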

Picking the Right Log File Analysis Tool

Let’s be honest: trying to sift through millions of log file entries by hand is a recipe for a headache. It's simply not feasible. To do this right, you need a specialized tool to slice, dice, and make sense of all that raw data. The best one for you really boils down to your budget, how comfortable you are with technical tools, and the sheer size of the website you’re dealing with.

The market for these tools is booming, which tells you just how critical log file analysis has become. The global log management market was valued at around $2.51 billion and is projected to climb to $2.87 billion. This growth is fueled by smarter AI and a real demand for tools that don't require a computer science degree to operate. If you want to dig deeper into these numbers, you can explore more insights on the log management market from The Business Research Company. More options are great, but it also means you have to choose wisely.

Desktop Software for Hands-On Analysis

For most SEOs I know—consultants, agency folks, and in-house teams at small to mid-sized companies—a desktop application is the sweet spot. These tools pack a serious punch without the intimidating learning curve of command-line interfaces or the monthly hit of a big cloud platform.

A classic in this space is the Screaming Frog SEO Log File Analyser. It's a go-to for a reason. You get a clean, visual interface where you can upload your logs, marry them with a fresh site crawl, and start spotting problems almost instantly.

Inside the tool, you'd typically see a clear breakdown of verified bot hits and server response codes over a specific period.

It’s all about turning a mountain of text into easy-to-read charts. You can immediately see which bots are hitting your site and if you have a spike in nasty 404s or other errors.

The real magic of a tool like Screaming Frog is how it merges log data with crawl data. Suddenly, you have context. You can see if Googlebot is wasting its precious crawl budget on pages that are non-indexable, pointing to another URL via a canonical, or completely orphaned.
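The word "verified" matters because anyone can send a request with a Googlebot user-agent. Google documents a reverse-then-forward DNS check for confirming real Googlebot traffic, and the sketch below follows that idea. It is not how any specific tool implements verification, the function name is hypothetical, and `socket.gethostbyname_ex` only handles IPv4.

```python
import socket

# Hostname suffixes Google lists for its crawlers.
GOOGLE_SUFFIXES = (".googlebot.com", ".google.com", ".googleusercontent.com")


def is_verified_googlebot(
    ip,
    reverse_lookup=socket.gethostbyaddr,
    forward_lookup=socket.gethostbyname_ex,
):
    """Reverse-resolve the IP, check the domain, then forward-resolve to confirm."""
    try:
        hostname = reverse_lookup(ip)[0]
        if not hostname.endswith(GOOGLE_SUFFIXES):
            return False
        # The forward lookup must list the original IP, or the PTR record was faked.
        return ip in forward_lookup(hostname)[2]
    except OSError:
        # Covers failed lookups (socket.herror and socket.gaierror).
        return False
```

The lookup functions are parameters so you can cache results or plug in fakes for testing; DNS calls are slow, so in practice you would check each distinct IP once rather than every log line.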

Command-Line and Cloud-Based Platforms

When you're working on enterprise-level sites that spit out gigabytes of log data every single day, your trusty desktop app might start to choke. That’s when you need to bring in the heavy artillery.

- Command-Line Utilities: If you're comfortable in a terminal, free tools like `grep`, `awk`, and `sed` (standard on Linux/macOS) give you incredible power. You can filter and manipulate enormous files in a blink, but it absolutely requires some shell scripting know-how.

- Cloud-Based Platforms: Services like the Semrush Log File Analyzer, Datadog, or custom-built solutions on platforms like AWS or Microsoft Fabric are built for this kind of scale. They ingest huge data streams in near real-time and offer sophisticated dashboards. The trade-off? They come with a hefty subscription fee.

Ultimately, picking your tool is the first strategic move you'll make in this process. My advice? Start with what feels accessible. If you’re just getting your feet wet, a user-friendly desktop app is the perfect way to learn the fundamentals and get some quick wins. As your projects get bigger and more complex, you can always graduate to the more powerful, scalable options.

Finding Actionable Insights in Your Log Data

Alright, you’ve wrangled your log data and loaded it into your tool. This is where the real fun starts. You're about to shift from data janitor to data detective, turning raw server logs into a concrete SEO strategy.

The trick is to know what questions to ask. You're not just scanning for errors; you're hunting for patterns. When you analyze log file data, you're getting a candid look at how search engine bots behave on your site. This isn't a simulation or a guess—it's direct evidence that helps you make sharp, effective changes.

Pinpoint Crawl Budget Waste

One of the first and most impactful things you can uncover is crawl budget waste. Every site gets a finite amount of Googlebot's attention, and you need to make sure it's spent on your most important pages, not junk URLs.

You’ll often be surprised at how much of your budget gets soaked up by low-value pages. The usual suspects tend to be:

- Faceted Navigation: Think of all those URLs with parameters like `?color=blue&size=large`. They can create thousands of near-duplicate pages that eat up crawl budget.

- Old Tag or Archive Pages: These are often thin content pages that provide very little value but still get crawled regularly.

- Dev or Staging URLs: It's more common than you'd think for internal links to mistakenly point to a non-production version of your site.

- Redirect Chains: A series of 301s forces Googlebot to make multiple hops to get to the final page, wasting time and resources on every request.

By isolating these URL patterns in your logs, you can see just how many hits they're getting. Armed with that data, you can confidently go in and fix the problem, whether that means updating robots.txt, adding nofollow attributes, or using canonical tags to point bots to the right place.
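Isolating those patterns can be as simple as bucketing each crawled URL and looking at the shares. In this sketch the path prefixes are placeholders, not a standard, and bot hits are assumed to be dicts with a `url` key holding the requested path.

```python
from collections import Counter
from urllib.parse import urlsplit

# Hypothetical low-value sections; adjust to your own URL structure.
LOW_VALUE_PREFIXES = ("/tag/", "/archive/", "/staging/")


def classify_url(url):
    """Put a URL into a rough crawl-waste bucket."""
    parts = urlsplit(url)
    if parts.query:
        return "parameterized"
    if parts.path.startswith(LOW_VALUE_PREFIXES):
        return "low_value_section"
    return "other"


def crawl_share_by_bucket(bot_hits):
    """Return the fraction of bot hits that landed in each bucket."""
    counts = Counter(classify_url(hit["url"]) for hit in bot_hits)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {bucket: count / total for bucket, count in counts.items()}
```

If the `parameterized` share turns out to be a large slice of all bot hits, that is your evidence for prioritizing the faceted navigation fix.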

Uncover Critical Errors and Technical Issues

Sure, tools like Google Search Console show you errors, but they're often delayed and only represent a sample of what's really happening. Your server logs give you the full, unfiltered story in real time.

When you filter your logs to show only bot hits that received a 4xx or 5xx status code, you're essentially creating an urgent to-do list. Did you just do a site migration? A sudden spike in 404s will jump right out at you. A nagging 503 error points to a server that's struggling under the load and needs attention before Google starts dropping your pages from the index.

The real power here is context. By cross-referencing these error URLs with your site crawl data, you can see if the broken pages have high authority or receive significant internal links. This helps you prioritize which fixes will have the biggest impact on your site's health and performance.
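As a rough sketch of that cross-referencing, you could rank error URLs by how many internal links point at them. The `inlinks_by_url` mapping is assumed to come from your crawl export, and the shape of the hits (dicts with `url` and `status`) is hypothetical.

```python
from collections import Counter


def prioritize_errors(bot_hits, inlinks_by_url):
    """List URLs that returned 4xx/5xx to bots, most internally linked first."""
    error_hits = Counter(
        (hit["url"], hit["status"]) for hit in bot_hits if hit["status"] >= 400
    )
    rows = [
        {
            "url": url,
            "status": status,
            "bot_hits": count,
            "inlinks": inlinks_by_url.get(url, 0),
        }
        for (url, status), count in error_hits.items()
    ]
    # Sort by internal links first, then by how often bots hit the error.
    rows.sort(key=lambda row: (row["inlinks"], row["bot_hits"]), reverse=True)
    return rows
```

Sorting on inlinks first is a judgment call; if you have backlink or authority data, that could reasonably replace or join it in the sort key.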

Beyond just SEO, log analysis can also tip you off to potential security problems. For a deeper dive into protecting your site, a detailed WordPress security checklist is a great resource.

Discover Important Orphan Pages

Orphan pages are a peculiar problem. They exist on your site, but no internal links point to them, so standard website crawlers will almost always miss them. But search engines can still find them through old sitemaps or backlinks from other websites.

Your log files are the only place to find definitive proof of these pages. The process is straightforward: compare the list of URLs crawled by Googlebot with the list of URLs from your own site crawl. The URLs that show up in the logs but not your crawl are your orphans.
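That comparison is essentially a set difference. A minimal sketch, assuming bot hits are dicts with `url` and `status` keys and that log URLs and crawl URLs are written in the same form (both as paths, for example):

```python
from collections import Counter


def normalize(url):
    """Drop any fragment and trailing slash so /page and /page/ compare equal."""
    url = url.split("#", 1)[0]
    return url.rstrip("/") or "/"


def find_orphan_candidates(bot_hits, crawled_urls):
    """Return (url, bot hit count) for live URLs in the logs but not in the crawl."""
    crawled = {normalize(url) for url in crawled_urls}
    orphans = Counter()
    for hit in bot_hits:
        url = normalize(hit["url"])
        # Only count pages that actually resolved; errors are a separate problem.
        if hit["status"] == 200 and url not in crawled:
            orphans[url] += 1
    return orphans.most_common()
```

The results are candidates, not confirmed orphans: a crawl that was capped or blocked partway through will also produce URLs missing from the crawl list.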

Here’s a real-world example:

Imagine you find /important-service-guide getting consistent hits from Googlebot, but it's completely absent from your Screaming Frog crawl. That’s a classic orphan page. Google clearly thinks it’s important enough to keep checking, but without any internal links, it's getting zero link equity from the rest of your site and is probably underperforming.

The fix is simple and incredibly effective. Find relevant, high-authority pages on your site and add internal links pointing to this guide. Just like that, you’ve reconnected it to your site architecture and given its SEO potential a massive boost.

Turning Your Analysis into SEO Wins

Digging through log files is fascinating, but spotting the problems is only half the job. The real magic happens when you translate those technical breadcrumbs into a solid SEO roadmap that actually gets results.

You’ve done the hard part and unearthed the issues. Now, it’s time to build a smart, prioritized action plan. This isn't about creating a laundry list of chores; it's about turning raw data into concrete tasks that will genuinely improve your site's health and search visibility.

Creating a Prioritized Action Plan

Let's be realistic—your log analysis probably turned up a mix of everything from minor quirks to glaring, red-flag errors. The key is not to get overwhelmed and try to fix it all at once. You need to prioritize. I always recommend sorting tasks based on their potential SEO impact versus the effort required to fix them.

For example, say you found a ton of crawl budget being wasted on URLs with messy parameters. That’s a classic, high-impact problem. Your action plan could look something like this:

- Implement `rel="canonical"` tags: This is your first line of defense to point all that SEO value back to the main URL.

- Update your `robots.txt` file: For a more forceful approach, you can block crawlers from even looking at those low-value pages.

- Clean up how parameter URLs get linked: Google retired the URL Parameters tool in Search Console in 2022, so you can no longer configure parameter handling there. Remove parameterized URLs from internal links and sitemaps so your canonical tags and robots.txt rules do the work.

What if your logs are full of search bots hitting 404 error pages? That's another urgent fix. Pull together a list of those broken URLs and get to work setting up 301 redirects, especially for any that have backlinks or significant bot traffic. This simple step reclaims lost link equity and tidies up the pathways for search engines.

The real shift in mindset is moving from a "list of problems" to a "list of solutions." Every issue you find in your log files should have a clear, actionable next step tied to it. This is how a technical audit becomes a strategic weapon.

Integrating Orphan Pages and Fixing Performance

And what about those orphan pages you found? Think of them as hidden treasure. The solution here is to weave them back into your site's fabric with a smart internal linking strategy. Find relevant, authoritative pages on your site and add natural, contextual links that point to these forgotten assets. Doing this allows authority to flow and gives them a real chance to start ranking.

Log files are also a goldmine for spotting performance drags. They can point you to the exact files or scripts that are slowing things down, giving you a clear path for effective website speed optimization—a critical factor for both users and search engines.

The sheer volume of this data has created a booming market for log management software. The Asia Pacific region, in particular, is set to see major growth. It's a competitive space, with big names like Cisco and IBM snapping up smaller companies to bolster their tools. You can read more about the log management market trends from GrandViewResearch if you're curious about the industry landscape.

Finally, remember to track everything. To get buy-in from your team or clients, you have to show the value of all this technical work. Present the "before" data—like the exact amount of wasted crawl budget or the number of bot errors—and follow up with the "after" results. If you need a framework for this, there's some excellent advice on how to measure SEO success that can help you prove your impact. This is how you turn a deep technical dive into a clear business win.
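The before-and-after comparison boils down to simple arithmetic. In this sketch, the labels and every number are invented purely to show the calculation; your own figures would come from the labeled bot hits in each analysis period.

```python
def wasted_share(hits_by_label, wasted_labels):
    """Fraction of bot hits that went to URLs you consider wasted crawl."""
    total = sum(hits_by_label.values())
    if total == 0:
        return 0.0
    wasted = sum(
        count for label, count in hits_by_label.items() if label in wasted_labels
    )
    return wasted / total


# Hypothetical labels and counts, for illustration only.
WASTED = {"noindex", "parameterized", "redirect"}
before = {"indexable": 600, "noindex": 150, "parameterized": 200, "redirect": 50}
after = {"indexable": 900, "noindex": 40, "parameterized": 50, "redirect": 10}

print(f"before: {wasted_share(before, WASTED):.0%}")
print(f"after:  {wasted_share(after, WASTED):.0%}")
```

Reporting a share rather than a raw count keeps the comparison fair when total bot activity changes between the two periods.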

Answering Your Log File Analysis Questions

If you're new to server log analysis, it's completely normal to have a few questions. Let's be honest, staring at raw log files can feel a bit intimidating at first. But once you get the hang of it, you'll see it's less about technical wizardry and more about unlocking a new layer of SEO intelligence.

Here are some of the most common questions we get, along with some straight-to-the-point answers from our experience.

How Often Should I Actually Do This?

This is probably the number one question people ask. For most websites, running a log file analysis once a quarter is a great rhythm. It's often enough to spot problems before they fester but not so frequent that it becomes a chore.

However, if you're running a massive e-commerce site with thousands of products or a news website that publishes dozens of articles a day, you really should be doing this monthly. With that much change happening, a lot can go wrong in a short amount of time.

Pro Tip: The absolute most important time to check your logs is right after a site migration, a major redesign, or any big change to your URL structure. This is your chance to get an immediate look at how crawlers are reacting and fix any glaring errors before they do real damage to your rankings.

Do I Need to Be a Super-Technical Coder?

Absolutely not. While having developer skills is never a bad thing, you don't need them to get incredible value from your logs. The days of needing to be a command-line expert are long gone.

Modern tools have thankfully made log analysis much more accessible. Platforms like the Screaming Frog Log File Analyser or the features built into Semrush are designed specifically for SEO professionals, not server admins. They turn messy log files into clear, visual dashboards.

These tools instantly show you things like:

- Sudden increases in 4xx or 5xx server errors

- How crawl budget is split between Googlebot Desktop and Mobile

- Which URLs are getting all the attention from bots (and which are being ignored)

Essentially, they do the technical heavy lifting, so you can focus on what the data actually means for your strategy.
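If you're curious what one of those views involves, the desktop versus mobile split comes down to reading the user-agent. This sketch relies on Googlebot's published user-agent strings, where the smartphone crawler includes `Mobile` and the desktop one does not. The function names are hypothetical, and the check trusts the user-agent without verifying the IP.

```python
from collections import Counter


def googlebot_variant(agent):
    """Return 'smartphone' or 'desktop' for the main Googlebot crawler, else None."""
    # "Googlebot/" skips other Google crawlers such as Googlebot-Image.
    if "Googlebot/" not in agent:
        return None
    return "smartphone" if "Mobile" in agent else "desktop"


def crawl_split(bot_hits):
    """Count Googlebot hits per variant from dicts with an 'agent' key."""
    variants = (googlebot_variant(hit["agent"]) for hit in bot_hits)
    return Counter(variant for variant in variants if variant is not None)
```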

What's the Biggest Mistake to Avoid?

The single biggest pitfall is analyzing your log data in a vacuum. A log file tells you what search bots are crawling, but it doesn't tell you if they're crawling the right things.

For the insights to be truly powerful, you have to cross-reference your log data with a full site crawl from a tool like Screaming Frog or Sitebulb. This combination is where the magic happens. It immediately shows you if Google is wasting its precious crawl budget on non-indexable pages, redirect chains, or low-value parameter URLs instead of your most important content.

When you're ready to share your findings, this combined data is what makes your recommendations undeniable. We talk a lot more about how to frame this kind of data in our guide on how to create SEO reports that stakeholders will actually understand and act on.
